The auditory cortex

Anatomy of auditory cortex

Where is auditory cortex?

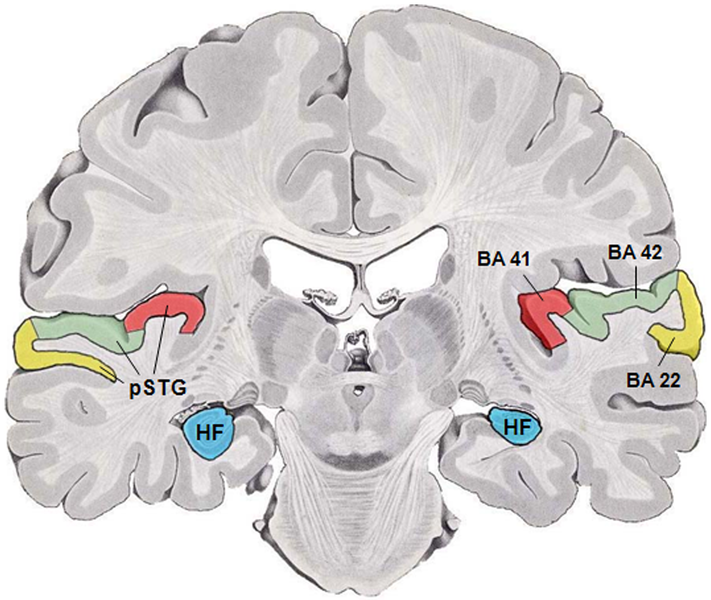

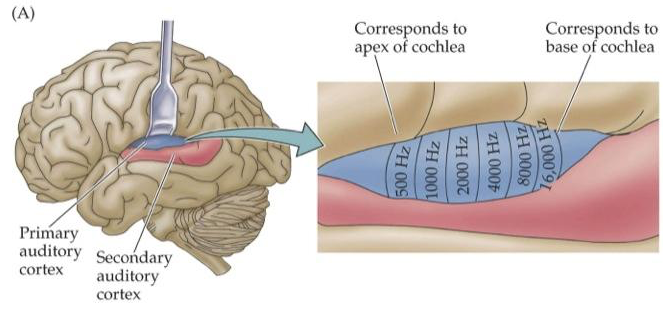

Recall that auditory cortex is found on the posterior superior temporal gyrus (pSTG), Brodmann’s areas 41 and 42:

Fig. 87 Brodmann’s areas 41 and 42, a first approximation of primary auditory cortex. [1]

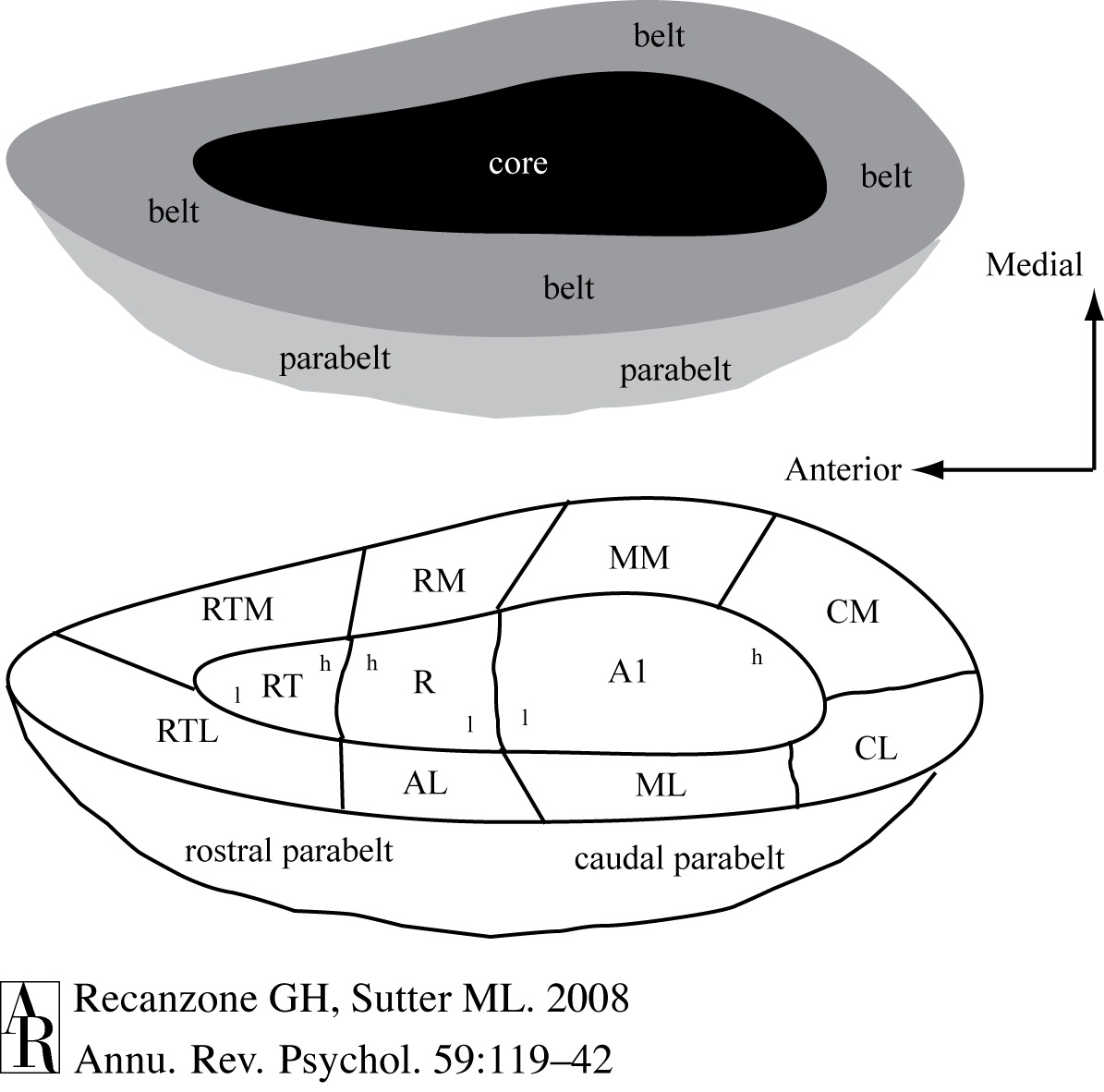

There is a problem with this conventional representation: auditory cortex does not stay on the lateral surface but curves over the upper lip of the superior temporal gyrus and down into the lateral sulcus (Sylvian fissure), onto the upper surface of the temporal lobe, as the following pair of diagrams endeavors to show (see also the figure on the tonotopic mapping of core auditory cortex [7]):

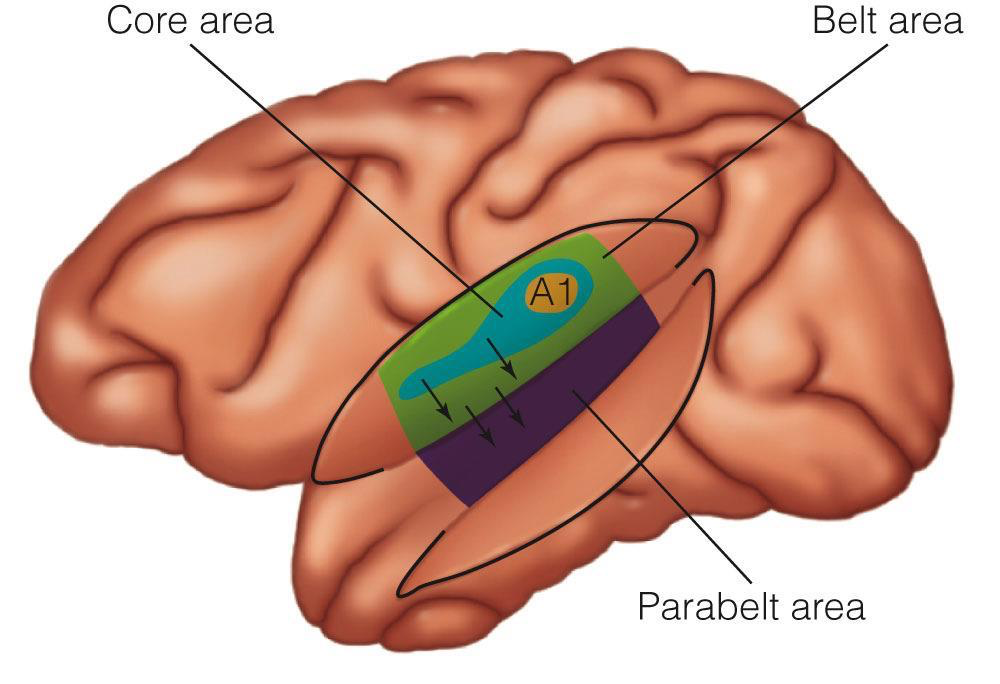

Auditory cortex has traditionally been subdivided into primary (A1), secondary (A2) and tertiary (A3) areas, though nowadays this terminology has been replaced by core, belt and parabelt, respectively. Here are two illustrations, without and with the context of the surrounding cortex:

What subcortical connections does it receive?

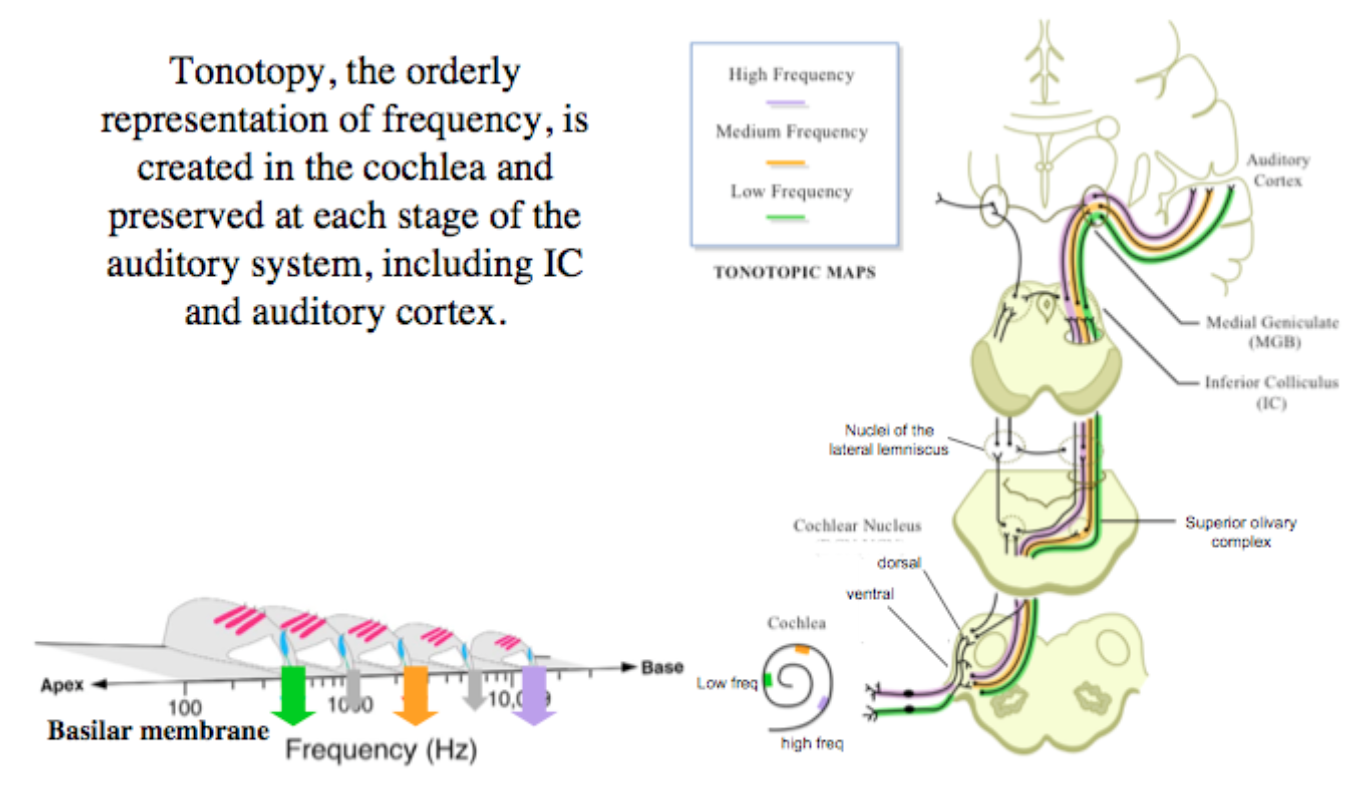

As the previous chapter would lead us to expect, auditory cortex receives the tonotopic map of the cochlea:

Fig. 88 Maintenance of tonotopy through the central auditory pathway. [6]

It then unfolds this map across the core auditory cortex:
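The chapter does not quantify the cochlear map, but one standard way to make it concrete is Greenwood's place-frequency function, which gives the frequency that best excites each point along the basilar membrane. The Python sketch below is an illustration under that assumption, not the author's model: it uses Greenwood's (1990) published constants for the human cochlea (A = 165.4 Hz, a = 2.1, k = 0.88), with position x expressed as a proportion of cochlear length measured from the apex. The function names are hypothetical.

```python
import math

# Greenwood (1990) place-frequency constants for the human cochlea.
# Used here only as an illustration of tonotopy; not taken from this chapter.
A_HZ = 165.4  # scaling constant (Hz)
ALPHA = 2.1   # slope, for position given as a proportion of cochlear length
K = 0.88      # integration constant


def greenwood_hz(x: float) -> float:
    """Best frequency at relative position x (0 = apex, 1 = base)."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return A_HZ * (10 ** (ALPHA * x) - K)


def position_for_hz(freq_hz: float) -> float:
    """Inverse map: relative cochlear position whose best frequency is freq_hz."""
    return math.log10(freq_hz / A_HZ + K) / ALPHA


# Walk from apex to base and print the best frequency at each point.
for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f} -> {greenwood_hz(x):8.0f} Hz")
```

Frequency rises roughly exponentially from about 20 Hz at the apex to about 20 kHz at the base. It is this ordered low-to-high layout that the central auditory pathway preserves on its way to the cortex.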

How does it fit into the big picture?

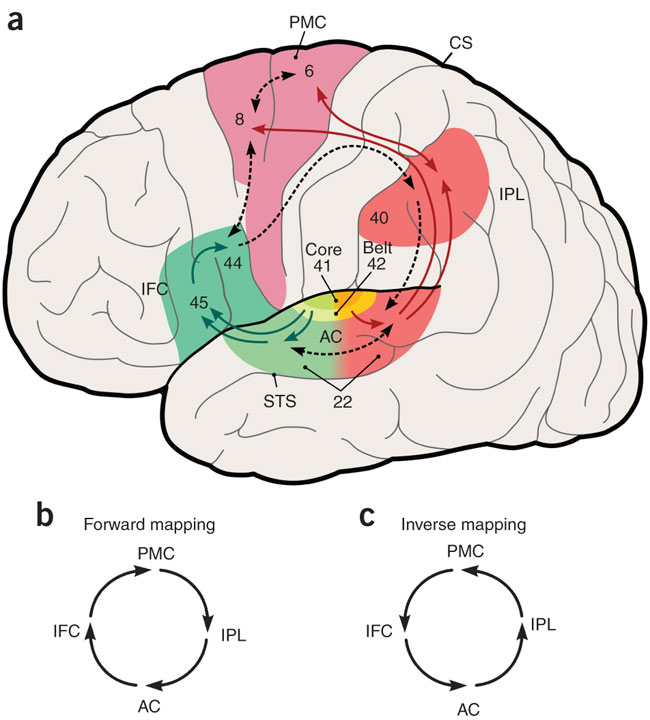

Although it is not exactly the model I want to use, the following diagram illustrates how auditory cortex sits within two streams of language processing:

Notice the

How does it work?

Does the brain make the distinction between spectral and temporal frequency that I have just made?

The right-ear advantage for certain speech sounds

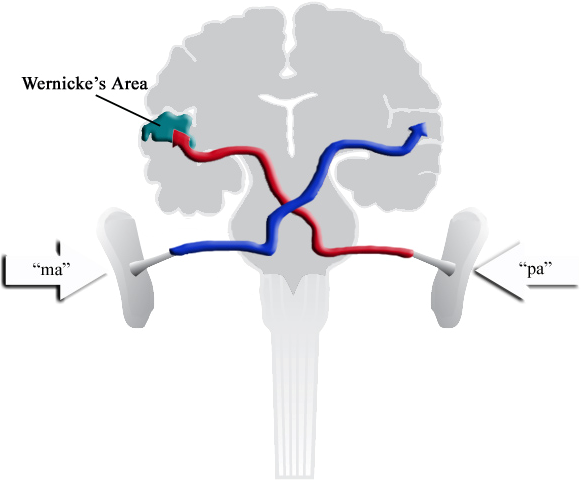

Recall that if a different speech sound is presented to each ear simultaneously, listeners tend to report the one heard by the right ear, because the dominant (contralateral) pathway from the right ear projects to the left auditory cortex:

Fig. 91 “pa” is heard, rather than “ma” or a mixture of the two. [9]

From many experiments like this one in the 1960s and 1970s, the tendencies collected in Table 28 began to emerge:

| Strong right-ear advantage | Weak right-ear advantage | No right-ear advantage |
|---|---|---|
| stops (p, b, t, d, k, g) | liquids (l, r), glides (y, w), fricatives (f, v, θ, ð, s, z, ʃ, ʒ) | vowels |
| short duration, fast change | medium duration | long duration |

More generally, researchers began to realize that speech recognition works differently in the two hemispheres.

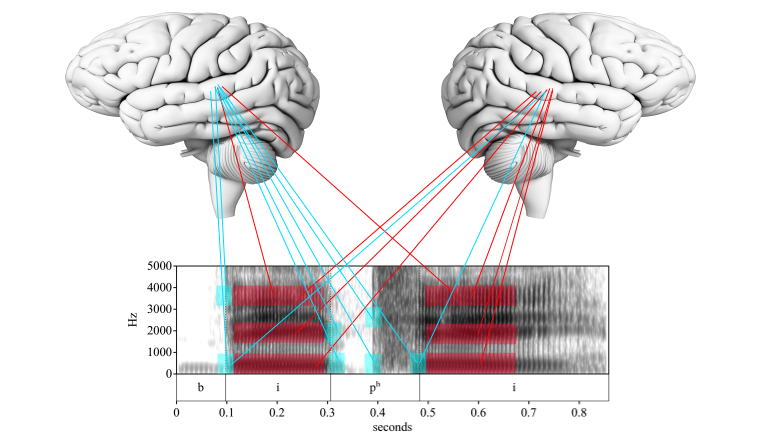

Two windows of temporal integration

This realization has been explained in various ways as evidence accumulated, but the most recent explanation, which I will focus on here, postulates that each hemisphere is specialized for a different temporal window size. The left hemisphere prefers the shorter window, which corresponds to a higher frequency, while the right hemisphere prefers the longer window, which corresponds to a lower frequency:

Fig. 92 Lateralization of temporal window size. Author’s image.
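The correspondence between window size and frequency is just f = 1/T: successive non-overlapping windows of duration T follow one another at a rate of 1/T per second, and a window of N samples taken at sampling rate fs also has a frequency resolution of fs/N = 1/T. The Python sketch below makes this arithmetic concrete. The 25 ms and 200 ms durations and the 16 kHz sampling rate are assumptions chosen for illustration, not values read off Fig. 92, and the helper names are hypothetical.

```python
import numpy as np

# Hypothetical window durations (seconds), chosen only for illustration:
# a short window (left hemisphere) and a long window (right hemisphere).
WINDOWS_S = {"left (short)": 0.025, "right (long)": 0.200}
SAMPLE_RATE_HZ = 16_000  # assumed sampling rate of the audio signal


def window_rate_hz(duration_s: float) -> float:
    """Rate at which successive non-overlapping windows of this length occur."""
    return 1.0 / duration_s


def spectral_resolution_hz(duration_s: float, fs: float = SAMPLE_RATE_HZ) -> float:
    """Spacing between DFT frequency bins for a window of this duration."""
    n_samples = int(round(duration_s * fs))
    freqs = np.fft.rfftfreq(n_samples, d=1.0 / fs)  # bin centers: k * fs / n
    return float(freqs[1] - freqs[0])


for label, dur in WINDOWS_S.items():
    print(f"{label}: {dur * 1000:.0f} ms window -> "
          f"{window_rate_hz(dur):.0f} Hz rate, "
          f"{spectral_resolution_hz(dur):.0f} Hz frequency resolution")
```

With these assumed values the short window corresponds to 40 Hz and the long window to 5 Hz. The same numbers show the trade-off involved: the short window can track fast changes, such as those of the stop consonants in Table 28, but only separates frequencies about 40 Hz apart, whereas the long window separates frequencies about 5 Hz apart at the cost of temporal precision.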

Some data

Prosody in the right hemisphere

Recall that the fundamental frequency (F0) is the lowest frequency component of a voiced sound, corresponding to the rate at which the vocal folds vibrate, and that it is perceived as pitch. This suggests that pitch, at least, is a low-frequency phenomenon and so should be processed in the right hemisphere.

It has been known since the 1970s that the right hemisphere dominates the perception of prosody. The initial evidence came from descriptions of right-hemisphere lesions that produce a pattern of aprosodias (deficits in either the expression or the comprehension of prosody), analogous to the well-documented pattern of aphasias produced by left-hemisphere lesions.

Emotional prosody

With respect to production, the speech of patients with right hemisphere lesions has been characterized as monotonous and unmodulated. For instance, Ross & Mesulam (1979) report a patient who had difficulty disciplining her children because they could not detect when she was upset or angry. She eventually learned to emphasize her speech by adding “I mean it!” to the end of her sentences.

With respect to perception, studies such as that of Tucker, Watson & Heilman (1977) asked participants to identify the emotion conveyed by semantically neutral sentences intoned to express happiness, sadness, anger, or indifference. Patients with right-hemisphere damage (RHD) were impaired in both identifying and discriminating these affective meanings, compared with both healthy controls (no brain damage, NBD) and patients with left-hemisphere damage (LHD).

Tones versus noise

Binder et al. [BFH+00] presented subjects undergoing fMRI with noise, tones, words, pseudo-words and reversed words. The auditory cortex was more strongly activated by the tones than by the noise, suggesting a role in the processing of simple auditory stimuli.

Planum temporale

[VBH+06]

Standard anatomical descriptions of white-matter bundles (Dejerine, 1980 and Nieuwenhuys et al., 1988), as well as recent diffusion tensor imaging (Parker et al., 2005), show that the arcuate fasciculus (also called the superior longitudinal fasciculus) connects the precentral area and the pars opercularis of the inferior frontal gyrus to the posterior part of the superior temporal gyrus, including the planum temporale (PT) and the lateral part of the superior temporal gyrus. PT involvement in syllable perception and voice onset time (VOT) processing, previously assessed with intracerebral recordings (Liégeois-Chauvel et al., 1999), was demonstrated with fMRI by Jancke et al. (2002). This was confirmed by Joanisse and Gati, who reported PT recruitment during the processing of consonants and tone sweeps, both of which require the processing of rapid temporal characteristics of auditory signals (Joanisse and Gati, 2003). The phonological (as opposed to semantic) specialization of the PT is also supported by reports of higher activity in this area during pseudo-word detection than during word detection in an auditory selective attention task (Hugdahl et al., 2003). Its leftward anatomical and functional specialization is reflected in the larger surface area of the PT in the left hemisphere (Geschwind and Levitsky, 1968) and in its greater functional involvement during right-ear presentation than during dichotic presentation of syllables (Jancke and Shah, 2002).

In most cases, left PT activation is observed during auditory stimulus presentation, but tasks based on assembling visually presented letters can also elicit PT activation (Jessen et al., 1999, Paulesu et al., 2000 and Rypma et al., 1999). The participation of this unimodal auditory association area in the absence of an auditory stimulus was confirmed by Hickok et al., who reported co-activation of the PT and the posterior superior temporal gyrus during both speech listening and covert speech production (Buchsbaum et al., 2001, Hickok et al., 2003 and Okada et al., 2003), together with motor regions in the frontal lobe, i.e., the inferior precentral gyrus and the frontal operculum. The perception–action cycle supported by these fronto-temporal areas, connected through the fibers of the arcuate fasciculus (Fig. 2, bottom), permits the implementation of a motor-sound-based, rather than purely sound-based, phoneme representation (Hickok and Poeppel, 2004). In such a model, articulatory gestures are the primary and common objects on which both speech production and speech perception develop and act, in agreement with Liberman’s motor theory of speech perception (Liberman and Whalen, 2000).

Disorders of auditory cortex: cortical deafness

[WC83] described a patient with bilateral lesions of the auditory cortex who was unable to hear any sounds despite no apparent damage to the peripheral hearing apparatus, and labeled the disorder cortical deafness. One review of the literature reported “only 12 cases of significant deafness due to purely cerebral pathology” ([GGL80], p. 43).

Identifying cases of cortical deafness has proved to be difficult for several reasons:

- patients rarely suffer bilateral lesions in auditory cortex;
- some patients believed to suffer from cortical deafness may, in fact, suffer from auditory inattention or neglect;
- cortical deafness is often transient, resolving to a less severe, or more specific, auditory processing disorder such as pure word deafness or auditory agnosia.

PowerPoint and podcast

The next topic

The next topic is the phonological network in the superior temporal sulcus.

End notes

Last edited Aug 18, 2025